POWER READ

More Than Meets the AI

Gain Actionable Insights Into:

- How revolutionary AI really is

- Machine Learning, Data Science, Neural Networks and their differences

- The real difference between a data scientist and a software engineer

Common AI Terminologies to Get Right

Terms like AI, Machine Learning or Data Science are often used interchangeably in newspapers, businesses, and job advertisements. This creates false expectations about what these algorithms can really do.

It started a few years ago with Data Science, which at first only meant performing traditional data analysis on increasingly large datasets.

Most industries already had a Data Science team back then, but the tools they were using pertained more to the field of statistics than to computer science. Soon, the attention shifted towards computer science, mostly due to the technical challenge of analysing large datasets. Nevertheless, predictive modelling in Data Science, which uses statistics to predict outcomes, continued to rely on methods close to those of traditional statistics, such as principal component analysis, regression, K-means, random forests, and XGBoost. Since these algorithms learn a set of “rules” from data, they have been dubbed Machine Learning algorithms to emphasise that these rules are not hard coded by the programmer.
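The difference between a hard-coded rule and a rule learned from data can be sketched in a few lines. The example below is only an illustration: the shipping-cost scenario, the function names and the numbers are all hypothetical, and simple linear regression stands in for any of the algorithms listed above.

```python
def hard_coded_cost(weight_kg: float) -> float:
    """Traditional software: the programmer writes the rule explicitly."""
    return 2.0 * weight_kg + 1.0


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Machine Learning: estimate slope and intercept from data (least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical observations (weight in kg, cost paid); nobody tells the
# algorithm that the underlying rule is cost = 2 * weight + 1.
weights = [1.0, 2.0, 3.0, 4.0]
costs = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(weights, costs)
learned_cost = slope * 5.0 + intercept  # prediction for an unseen weight
print(slope, intercept, learned_cost)
```

With clean data the learned rule coincides with the hard-coded one; with real data it would only approximate it, which is why the quality of the data matters so much.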

Machine Learning

Machine Learning is not as new as people think it is; it spans at least 60 years of research and development. Some people think of Machine Learning algorithms as traditional software; the reality is that a Machine Learning project is built around data. For this reason, the development process in Machine Learning is more similar to a research project than to a software development one.

Imagine that you are in your lab doing some experiment; you have an initial idea, a hypothesis or a question you need to verify by collecting data, but you don’t know if your picture is right, or if the steps you’re going to follow are correct. By measuring the outcome of the experiment, you may need to change your approach, your initial idea or even challenge established knowledge. This process may be long or short, successful or not, and it is really difficult to say before you start collecting and analysing data. In the data science world, this phase is called “exploratory analysis”, followed by data analysis and modelling (these last two may be part of the same process).

While natural science experiments can be performed in a controlled environment, the data we work with in the Data Science domain are often “dirty”, meaning they contain a substantial portion of random or systematic errors, they are often incomplete, and they are generally collected by third parties under often unknown conditions. Random errors can be accounted for within the statistical analysis (systematic errors are trickier and often not correctly taken into account), but unknown collection conditions are impossible to account for: data may have been collected under a certain set of hypotheses that led to biased results. This makes the development part of the Data Science workflow very dependent on data and often difficult to formalise at the very beginning of the project.
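A small simulation illustrates why the two kinds of error behave so differently. This is a sketch under stated assumptions: the true value, the noise level and the size of the bias are made-up numbers, and the noise is assumed to be Gaussian.

```python
import random
import statistics


def measure(true_value: float, n: int, noise_sd: float, bias: float,
            rng: random.Random) -> list[float]:
    """Simulate n measurements with random noise and a constant (systematic) bias."""
    return [true_value + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
TRUE_VALUE = 10.0       # hypothetical quantity we are trying to measure

random_only = measure(TRUE_VALUE, 10_000, noise_sd=1.0, bias=0.0, rng=rng)
with_bias = measure(TRUE_VALUE, 10_000, noise_sd=1.0, bias=0.5, rng=rng)

# Averaging many measurements cancels most of the random error...
mean_random_only = statistics.fmean(random_only)
# ...but the systematic error survives no matter how much data we collect.
mean_with_bias = statistics.fmean(with_bias)

print(round(mean_random_only, 2), round(mean_with_bias, 2))
```

More data helps with the first kind of error but not with the second, which is why a bias introduced by unknown collection conditions is so hard to detect from the dataset alone.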

These issues are largely absent in a traditional software development workflow, which does not depend on the quality or structure of data but only on the project's requirements, e.g. when the user performs a given action, the software responds in a specified way.

Some of the products that come out of Machine Learning include email spam detectors, Google’s search recommendations, and business insights on how to store goods efficiently, deliver your orders, or take you from one place to another with services like Uber, Grab or Lyft.

Neural Networks

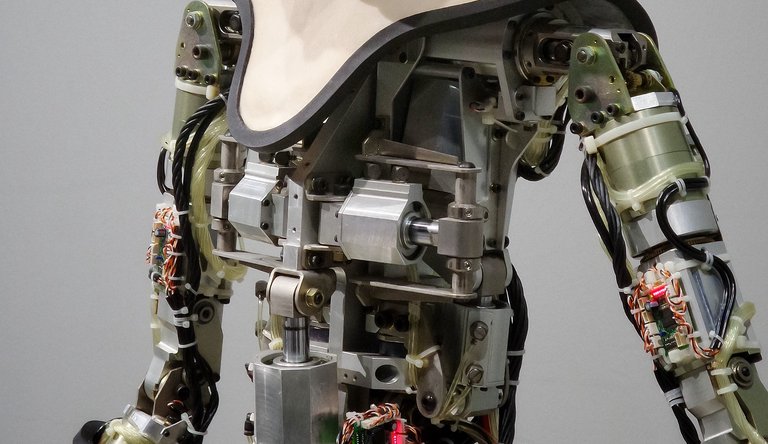

Neural Networks (NN) were originally born as a simplified representation of how the human brain works.

The basic computational unit of an NN is a simplified version of a neuron (a nerve cell): it collects data from external sources, processes them and returns an answer. The way the network learns also shapes its structure and is traditionally classified as supervised or unsupervised, although intermediate options exist. A supervised network learns from examples, i.e. we need to give the NN both the question and its answer to “train it” until it is able to generalise to new questions for which the answer is not known. So far, this has been the most successful form of learning, especially for applications. An unsupervised network is only given an input dataset and needs to find structure by itself. Although unsupervised learning is more interesting, it is still largely in the research stage.
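Supervised learning with a single artificial neuron can be sketched as follows. This is a minimal illustration using the classic perceptron update rule, not a description of how modern networks are trained; the task (learning the logical AND of two inputs) and all names are chosen only for the example.

```python
def predict(weights: list[float], bias: float, inputs: tuple[int, int]) -> int:
    """A simplified neuron: weighted sum of the inputs followed by a threshold."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


# Supervised learning: every "question" (inputs) comes with its "answer" (label).
examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights = [0.0, 0.0]
bias = 0.0

for _ in range(20):  # a few passes over the training examples
    for inputs, label in examples:
        error = label - predict(weights, bias, inputs)
        # Perceptron rule: nudge the weights only when the answer was wrong.
        weights = [w + error * x for w, x in zip(weights, inputs)]
        bias += error

predictions = [predict(weights, bias, inputs) for inputs, _ in examples]
print(predictions)  # matches the labels once training has converged
```

An unsupervised method would receive only the inputs, without the labels, and would have to group or structure them on its own.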

An NN has many parameters that need to be fixed (even millions!). Those that are fixed during training are called “learnable parameters”, while those that need to be fixed “by hand” (or using some other software) are called hyperparameters. Hyperparameters are not really part of the model, but they define how learning is going to work; they are chosen through a lengthy exploration that can take up a considerable share of the time in a Data Science project.
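The distinction can be made concrete with a toy model that has one learnable parameter and one hyperparameter. This is a sketch under simple assumptions: the model is `y = w * x`, training uses plain gradient descent, and the candidate learning rates are arbitrary values picked for illustration.

```python
def train(learning_rate: float, epochs: int,
          data: list[tuple[float, float]]) -> tuple[float, float]:
    """Fit y = w * x by gradient descent; return the learned w and the final loss."""
    w = 0.0  # learnable parameter: adjusted automatically during training
    for _ in range(epochs):
        gradient = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= learning_rate * gradient
    loss = sum((w * x - y) ** 2 for x, y in data) / len(data)
    return w, loss


data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]  # generated by y = 3 * x

# Hyperparameter: the learning rate is not learned, we have to explore it.
candidate_rates = [0.001, 0.01, 0.1, 0.3]
results = {rate: train(rate, epochs=50, data=data) for rate in candidate_rates}

best_rate = min(results, key=lambda rate: results[rate][1])
best_w = results[best_rate][0]
print(best_rate, best_w)
```

In this toy setting the smallest rates learn too slowly and the largest one diverges, so even a single hyperparameter requires several full training runs to tune. Real networks have many hyperparameters, which is where the lengthy exploration comes from.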

Often, the initial path we try ends up failing and new directions need to be explored. Sometimes, the client’s expectations in terms of model accuracy are based on results obtained on different datasets, but in reality the structure and quality of the data change the performance of a model in a non-trivial way.

Finally, models that perform well sometimes cannot be used in production due to other constraints, such as: Do the results need to be produced in real time? How fast is fast? Where is the data going to be stored and how is the model going to be deployed? Ultimately, these aspects can have a big impact on the model selection process.

While traditional Machine Learning works well in many domains, it fares less well in computer vision (gathering information from visual imagery) and in Natural Language Processing tasks (understanding and generating language). This is where Neural Networks really outperform traditional statistical analysis. These algorithms try to mimic the way neurons in the brain work, so they are better able to gather information from such sources. This is why many people in the field think that they are the gateway to AI.

Although Neural Networks have amazing generalisation capabilities compared to traditional Machine Learning algorithms, they still fail to generalise as well as humans do. For example, in image recognition tasks, we train the Neural Network to recognise whether an object is a car, a dog or something else. Although we may obtain very good prediction accuracy on the test set, we usually observe a drop in accuracy when the model is asked to infer on data coming from a different distribution or if the data have been slightly corrupted (when the corruption is crafted deliberately, this is known as an adversarial attack). This is also true for speech recognition tasks and in particular for conversational AI, where most algorithms do not really understand the meaning of a sentence or, most importantly, how to link sentences used at different stages of the conversation.
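The drop in accuracy under a change of distribution can be reproduced even with a very simple classifier. The sketch below is a deliberately minimal stand-in for a Neural Network: two hypothetical classes are drawn from Gaussian distributions, the "model" is a single threshold learned from training data, and the shift applied to the second test set is an arbitrary choice.

```python
import random


def sample(n: int, mean: float, rng: random.Random, shift: float = 0.0) -> list[float]:
    """Draw n one-dimensional data points around `mean`, optionally shifted."""
    return [rng.gauss(mean, 1.0) + shift for _ in range(n)]


def accuracy(threshold: float, class0: list[float], class1: list[float]) -> float:
    """Fraction of points classified correctly by a simple threshold rule."""
    correct = sum(x <= threshold for x in class0) + sum(x > threshold for x in class1)
    return correct / (len(class0) + len(class1))


rng = random.Random(0)  # fixed seed so the sketch is reproducible

# "Training": place the threshold halfway between the two class means.
train0, train1 = sample(2000, 0.0, rng), sample(2000, 4.0, rng)
threshold = (sum(train0) / len(train0) + sum(train1) / len(train1)) / 2

# Test set drawn from the same distribution as the training data.
acc_in = accuracy(threshold, sample(2000, 0.0, rng), sample(2000, 4.0, rng))

# Test set where every input is shifted, e.g. a sensor calibrated differently.
acc_shifted = accuracy(threshold,
                       sample(2000, 0.0, rng, shift=2.0),
                       sample(2000, 4.0, rng, shift=2.0))

print(round(acc_in, 3), round(acc_shifted, 3))
```

The model itself has not changed between the two evaluations; only the data have, and that alone is enough to degrade the predictions noticeably.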

Present and Future of AI

At the moment, AI cannot be thought of as a silver bullet. But as a toolset, it’s very valuable within its domain of validity. Machine Learning algorithms can help people and businesses in several ways, and as technology progresses, we will see them gradually integrate with other technologies. For example, modern search engines are infused with Machine Learning technologies that help them better classify the content of a webpage, an image or a video and therefore return more accurate results. Language translation is another field where Machine Learning has really made a difference, allowing us to communicate far more easily when travelling.

Despite its many applications, Machine Learning tends to be confined to tasks where mistakes do not have serious consequences. If an image is wrongly classified or an AI voice assistant does not understand what we mean, the repercussions are minimal.

Ideally, we would like our models to make decisions more accurately and faster than we do. However, a Neural Network does not always do so, and therefore we don’t have the necessary trust to give these algorithms total control over business and life decisions. For example, despite much effort from top financial services firms, Neural Network models are not used much in this field, as analysts prefer to rely on more traditional Machine Learning methods and domain knowledge.

Increasingly Integrated

Typically, a data scientist comes from a scientific background like computer science, maths, or physics, where model building and data analysis are essential. The main role of the data scientist is to provide insights into datasets and build predictive models. Although coding is part of the data scientist's job, it is usually not their primary job to build efficient, production-scale code.

On the other hand, a software engineer is more concerned with writing software. Usually a software engineer hardcodes the operations for the system to perform, and therefore there is less need to look at the data. The job is generally more structured than a data scientist’s job, with clearer short-term goals and timelines.

Having said that, the distinction between a data scientist and a software engineer is slowly disappearing. For example, a Machine Learning scientist is now expected to come up with new algorithms from a conceptual point of view, code them, and test their performance. They also help to refine the code structure and its performance on different architectures.

Personally, I prefer to keep the two roles separate. While it’s cheaper for a company to have someone who does both, it may lower the overall quality of output. Simply put, the wider your preparation, the more superficial your knowledge may become. Optimally, these two professions need to communicate and collaborate closely with each other for the best product to be developed: while the data scientist is concerned with solving a certain problem, the software engineer takes care of the deployment aspects, possibly on cloud infrastructures.

Much Needed Improvements

In 3-5 years, we can expect to see more understandable and stable AI. Although we’re able to build these computational models, we find it challenging to understand the AI’s decision-making process. It’s like having somebody propose a solution that they are unable to explain. And this explanation is the missing piece; without it, we’re unable to completely trust an AI to make the right choices for us.

Also, the proposed solutions are often not very stable with respect to changes in the underlying distribution or small modifications. Both things necessitate a better understanding of Neural Networks, the characterisation of their loss landscape and the development of new computational schemes.

3 Key Insights

1. Machine Learning Is Built Around Data

Machine Learning is not as new as people think it is; it spans at least 60 years of research and development. Its algorithms learn their rules from data rather than having them hard coded. Some of the products that come out of Machine Learning are email spam detectors, Google’s search recommendations, and business insights on how to store goods efficiently, deliver your orders, or take you from one place to another with services like Uber, Grab or Lyft.

2. Neural Networks Are Key to AI’s Future

When you think of AI and its potential to be human-like, Neural Networks are what you might have in mind. They are loosely inspired by and modelled on our brain’s biological neural network, and are designed to recognise patterns. For example, by analysing sample images of human faces, a network is able to pick up facial features and use them to identify other faces, although, as I’ve mentioned, it has its limits.

3. Read Up on Credible Sources Online

Don’t stay confused about the details and technicalities; go out there and actively read up online. There are many resources available on the web. To get a better understanding of AI, look out for information from technical experts who have a clear knowledge of the fine distinctions between these technical concepts.
