POWER READ

GenAI Explainability: Unlocking the Black Box

Gain Actionable Insights Into:

- Implementing visual explanations like token highlighting and chain of thought reasoning to build user trust

- Utilizing the RAG triage method to detect and mitigate hallucinations in your AI applications

- Applying key metrics to ensure the quality and reliability of LLM-generated content

The Imperative of Explainable GenAI

In the rapidly evolving landscape of artificial intelligence, Large Language Models (LLMs) have emerged as game-changers. However, as these models become more sophisticated and are trusted with higher-stakes decisions, the need to explain their outputs grows with them. This is where the concept of explainable AI becomes crucial.

Imagine you're in a high-stakes meeting, presenting the results of an LLM-powered analysis to your board of directors. You confidently state that your AI predicts a 15% increase in market share over the next quarter. Suddenly, a board member asks, "How did the AI arrive at this conclusion?" Without a solid understanding of explainable AI, you might find yourself stumbling for an answer.

This scenario underscores why explainability isn't just a technical nicety; it's a business imperative. Let's break down the vital components of GenAI explainability:

-

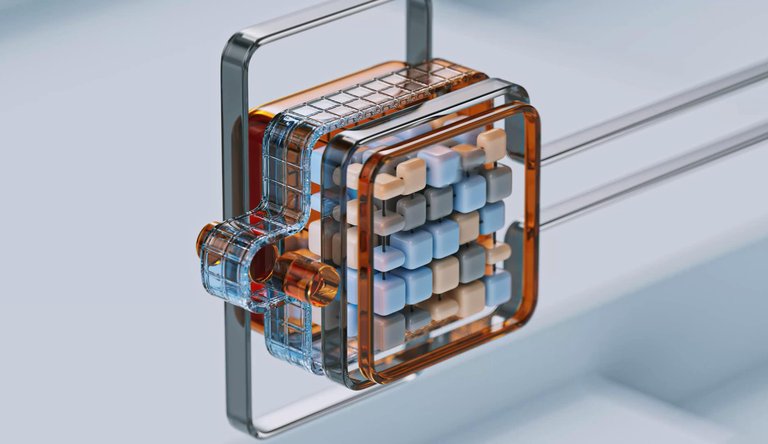

Model Interpretability: This is about demystifying what happens inside the "black box" of LLMs. When you're working with models like GPT-3 or Llama, you need to be able to explain, in simple terms, how these models arrive at their outputs.

-

Visual Explanations: These are powerful tools in your explainability arsenal. They include techniques like token highlighting, which visually shows which parts of the input were most influential in generating the output.

-

Trust Building: At its core, explainable AI is about fostering trust. When you can show stakeholders how an AI system arrived at a particular conclusion, you're not just sharing information—you're building confidence in your AI-driven processes.

Strategies for Implementing Explainable GenAI

Now that we understand the importance of explainable AI, let's dive into practical strategies you can implement in your organization.

Leveraging Visual Explanations

Visual explanations are one of the most powerful tools at your disposal. Here's how you can use them effectively:

- Token Highlighting: As mentioned, this technique involves visually emphasizing the parts of the input text that were most influential in generating the output. For example, if your LLM is analyzing performance appraisals to identify areas for improvement, you can highlight the specific phrases in the feedback that led to the AI's recommendations.

- Chain of Thought Reasoning: This approach involves showing the step-by-step reasoning process the AI used to arrive at its conclusion. It's particularly useful for complex decision-making tasks.
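To make token highlighting concrete, here is a minimal sketch in Python. It assumes you already have one importance score per input token from some attribution method; the scores, the `highlight_tokens` function, the `[[ ]]` markers, and the 0.5 threshold are all illustrative assumptions rather than part of any particular tool.

```python
def highlight_tokens(tokens, scores, threshold=0.5):
    """Wrap tokens whose relative importance meets the threshold in [[ ]]."""
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    top = max(scores, default=0.0)
    marked = []
    for token, score in zip(tokens, scores):
        # Scale scores so the most influential token has a relative score of 1.0.
        relative = score / top if top > 0 else 0.0
        marked.append(f"[[{token}]]" if relative >= threshold else token)
    return " ".join(marked)


# Hypothetical importance scores for one sentence of appraisal feedback.
tokens = ["Often", "misses", "project", "deadlines", "but", "communicates", "well"]
scores = [0.10, 0.80, 0.30, 0.90, 0.05, 0.20, 0.15]

print(highlight_tokens(tokens, scores))
# Often [[misses]] project [[deadlines]] but communicates well
```

In a real interface the markers would typically become background colors, with intensity tied to the score.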

In effect, showing the AI's thought process isn't just about transparency—it's about empowering your team to understand and critically evaluate AI outputs.
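One simple way to surface step-by-step reasoning is to ask the model for numbered steps and then separate those steps from the final answer before showing them to users. The sketch below assumes a plain-text response in that shape; the prompt wording, the function names, and the sample response are hypothetical, and no model is actually called.

```python
import re

# Illustrative prompt template; adapt the wording to your own application.
COT_TEMPLATE = (
    "Answer the question below. First list your reasoning as numbered steps, "
    "then give the result on a final line starting with 'Answer:'.\n\n"
    "Question: {question}"
)


def build_cot_prompt(question):
    """Fill the template with the user's question."""
    return COT_TEMPLATE.format(question=question)


def parse_cot_response(text):
    """Split a model response into (reasoning steps, final answer)."""
    steps, answer = [], None
    for line in text.splitlines():
        line = line.strip()
        step = re.match(r"^\d+[.)]\s+(.*)$", line)
        if step:
            steps.append(step.group(1))
        elif line.lower().startswith("answer:"):
            answer = line[len("answer:"):].strip()
    return steps, answer


# Hand-written stand-in for a model response.
sample = """1. The feedback mentions missed deadlines twice.
2. No other weakness is repeated.
Answer: Prioritize time management coaching."""

steps, answer = parse_cot_response(sample)
for number, step in enumerate(steps, start=1):
    print(f"Step {number}: {step}")
print(f"Conclusion: {answer}")
```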

Implementing the RAG Triage Method

The Retrieval-Augmented Generation (RAG) triage method is an approach to enhancing the explainability and reliability of your LLM applications. You can implement it in four steps:

- Query Processing: When a user asks a question, your system should first process and understand the query.

- Knowledge Retrieval: Based on the query, retrieve relevant information from your knowledge base.

- Response Generation: Use the LLM to generate a response based on the retrieved information and the original query.

- Explainability Layer: This is where you add transparency. Show which parts of the knowledge base were used to generate the response. This not only explains the AI's decision-making process but also builds trust in the system.
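The four steps above can be sketched as a toy pipeline. Everything here is an assumption made for illustration: the three-entry knowledge base, the word-overlap retriever, and the `generate` function, which stands in for a real LLM call by simply returning the retrieved passages.

```python
import re

# Hypothetical knowledge base keyed by document ID.
KNOWLEDGE_BASE = {
    "policy-01": "Employees accrue 20 days of paid leave per year.",
    "policy-02": "Remote work requires manager approval.",
    "policy-03": "Expense reports are due within 30 days of purchase.",
}


def process_query(text):
    """Step 1: reduce text to a set of lowercase words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query_terms, top_k=2):
    """Step 2: rank documents by how many words they share with the query."""
    scored = []
    for doc_id, text in KNOWLEDGE_BASE.items():
        overlap = len(query_terms & process_query(text))
        if overlap:
            scored.append((overlap, doc_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:top_k]]


def generate(query, doc_ids):
    """Step 3: placeholder for an LLM call; it only echoes the retrieved text."""
    if not doc_ids:
        return "No supporting information found."
    return " ".join(KNOWLEDGE_BASE[doc_id] for doc_id in doc_ids)


def answer_with_sources(query):
    """Step 4: return the response together with the passages behind it."""
    doc_ids = retrieve(process_query(query))
    return {
        "response": generate(query, doc_ids),
        "sources": [{"id": d, "text": KNOWLEDGE_BASE[d]} for d in doc_ids],
    }


result = answer_with_sources("How much paid leave do employees get?")
print(result["response"])
for source in result["sources"]:
    print(f"Source {source['id']}: {source['text']}")
```

The key design choice is that the response never travels alone: whatever the user sees is returned alongside the IDs and text of the passages that informed it.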

Applying Key Metrics for Quality Assurance

To ensure the reliability of your LLM outputs, focus on these three key metrics:

- Context Relevance: Measure how well the retrieved information matches the user's query. This ensures that your AI is drawing from appropriate sources.

- Groundedness: Assess how well the AI's response is supported by the retrieved information. This is crucial for detecting and preventing hallucinations, instances where the AI confidently provides information that is incorrect or not backed by its sources.

- Answer Relevance: Evaluate how directly the AI's response addresses the original question. This ensures that your AI stays on topic and provides valuable information.

By consistently measuring and optimizing these metrics, you can significantly enhance the quality and trustworthiness of your AI system's outputs.
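As a rough illustration of how the three metrics relate a query, its retrieved context, and the response, the sketch below scores each pair by simple word overlap. This is a deliberately crude stand-in: production systems usually rely on embeddings or an LLM-based judge, and the function names and example texts here are hypothetical.

```python
import re


def _words(text):
    """Lowercase word set used by the toy scoring functions."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_ratio(source, target):
    """Fraction of the words in `source` that also appear in `target`."""
    source_words = _words(source)
    if not source_words:
        return 0.0
    return len(source_words & _words(target)) / len(source_words)


def rag_scores(query, context, response):
    """Toy versions of the three metrics, each between 0.0 and 1.0."""
    return {
        # Does the retrieved context cover the terms of the query?
        "context_relevance": overlap_ratio(query, context),
        # Is the wording of the response backed by the retrieved context?
        "groundedness": overlap_ratio(response, context),
        # Does the response cover the terms of the query?
        "answer_relevance": overlap_ratio(query, response),
    }


query = "How much paid leave do employees get?"
context = "Employees accrue 20 days of paid leave per year."

grounded = rag_scores(query, context, "Employees get 20 days of paid leave.")
unsupported = rag_scores(query, context, "Employees get unlimited vacation.")

# The unsupported response shares far fewer words with the context,
# so its groundedness score is lower.
print(round(grounded["groundedness"], 2), round(unsupported["groundedness"], 2))
```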

Interpreting Model Outputs: Global vs. Local Explainability

When it comes to interpreting LLM outputs, it's crucial to understand the distinction between global and local explainability:

- Global Explainability: This refers to understanding how the entire system works. For instance, if you're using the RAG framework, global explainability involves explaining the overall process of retrieving information and generating responses. This high-level understanding is crucial for stakeholders who need to grasp the big picture of your AI system.

- Local Explainability: This focuses on explaining individual responses. It involves applying the metrics we just discussed—context relevance, groundedness, and answer relevance—to specific outputs. Local explainability is key for users who need to understand why the AI gave a particular response.

By addressing both global and local explainability, you're no longer operating a black-box system. Instead, you're providing a comprehensive explanation of how your AI works, from the overall architecture down to individual decisions. This approach builds trust and confidence for both end-users and LLM application owners.
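The distinction can be shown with a small sketch: a global explanation describes the pipeline once, while a local explanation is attached to each individual response. The stage names come from the four steps described earlier; the `LocalExplanation` class, the 0.5 threshold, and the example scores are assumptions made for illustration.

```python
from dataclasses import dataclass

# Global view: the same description applies to every response.
PIPELINE_STAGES = [
    "query processing",
    "knowledge retrieval",
    "response generation",
    "explainability layer",
]


def global_explanation():
    """Describe how the system works as a whole."""
    return " -> ".join(PIPELINE_STAGES)


@dataclass
class LocalExplanation:
    """Local view: metric scores for one specific response."""
    context_relevance: float
    groundedness: float
    answer_relevance: float

    def findings(self, threshold=0.5):
        """List the metrics that fall below the (hypothetical) threshold."""
        checks = [
            ("context_relevance", self.context_relevance,
             "retrieved passages may not match the question"),
            ("groundedness", self.groundedness,
             "response may not be supported by the retrieved passages"),
            ("answer_relevance", self.answer_relevance,
             "response may not address the question"),
        ]
        return [
            f"{name}={score:.2f}: {note}"
            for name, score, note in checks
            if score < threshold
        ]


print(global_explanation())
print(LocalExplanation(0.9, 0.3, 0.8).findings())
```

A response with low groundedness but high relevance scores, as in the example, points to the generation step rather than retrieval as the place to investigate.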

Steps to Take in the Next 24 Hours

- Audit Your Current AI Explainability Practices: Take a close look at your existing AI systems. Are you able to explain how they arrive at their conclusions? Identify areas where transparency is lacking and prioritize these for improvement.

- Implement a Visual Explanation Tool: Choose one of your AI applications and implement a basic form of visual explanation, such as token highlighting. This will immediately enhance the interpretability of your AI outputs.

- Set Up a RAG Triage Pilot: Select a small-scale project within your organization to pilot the RAG triage method. Implement the four steps: query processing, knowledge retrieval, response generation, and the explainability layer. Monitor the results and gather feedback from users to refine the system.

Ultimately, explainable AI is a vast and rapidly evolving field. While we've covered some key concepts here, we’ve only scratched the surface. By staying committed to transparency, continually tracking key metrics, and adapting your explainability strategies to your specific needs, you can help your organization harness the full potential of LLMs while maintaining the trust and confidence of your stakeholders.
